This is a continuation of Part 1, covering some highlights and lessons learned from a refactor of the way the Slack desktop client fetches messages. In particular: architecture lessons learned in hindsight, regressions found while refactoring, the pitfalls of over-aggressive caching, and the downsides of LocalStorage and client-side compression.

Lessons Learned From Refactoring

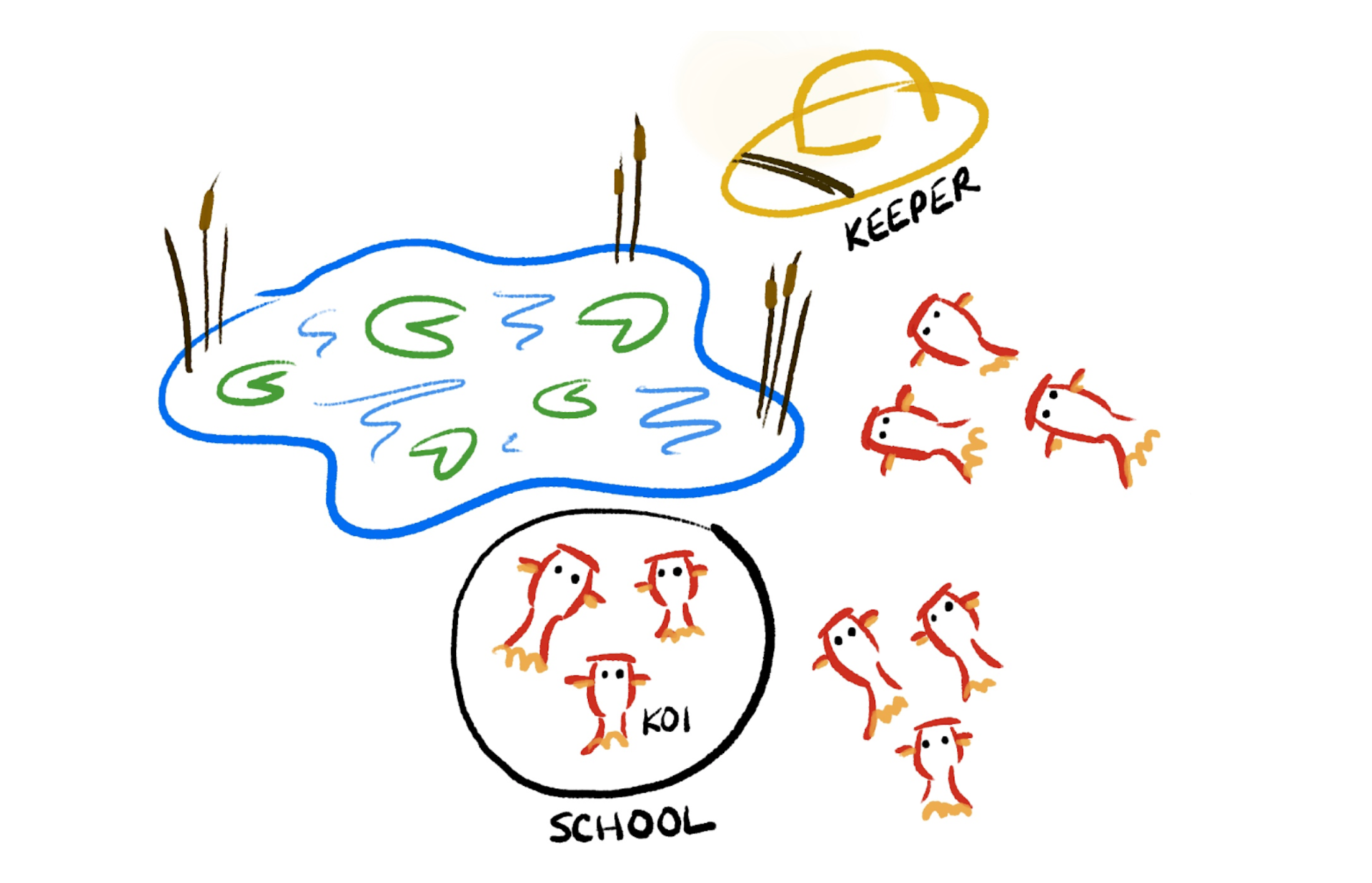

In hindsight, “metadata” API methods like users.counts, which lets us render the channel list state without having to fetch messages for every channel, would have been great to have from the start. Slack was originally built by a small team that envisioned the product being of interest for teams of “up to 150” users, and desktop clients were performing well for teams of that size.

As team sizes and usage grew, Slack’s mobile apps highlighted the need for more efficient ways to fetch state information. The relatively limited memory, CPU, cellular bandwidth and battery power of mobile clients drove demand for a new API method: one that could provide just enough data to display the channel list in a single network request, rather than having to query each channel individually.

With mobile driving more efficient ways to fetch state information, we wanted the desktop clients to take advantage of the same improvements. Refactoring the desktop client to call users.counts was trivial. Modifying it further to lazy-load message history was relatively easy; avoiding and deferring channels.history calls did not involve a lot of work. Most of the time spent after the deferral change went into making sure the client continued to behave as expected.

By deferring message history, the desktop client effectively had the rug pulled out from under it — the client no longer had a complete data model at load time. Given that the client was used to having all message history before displaying a channel, there were a lot of bugs that cropped up — especially when displaying channels for the first time.

Some of the behaviours affected:

- Auto-scrolling (to the oldest unread message)

- Placement of the red “new messages” divider line

- Display and timing of the “new messages” bar fade-out (“mark as read”) effect

- “Mark as read” behaviour, based on user preferences (i.e., on display of a channel, vs. waiting until the user scrolls back to the red line)

With deferral in place, it became possible to switch to a channel where message history had not yet loaded. A lot of refactoring was needed so the client could properly handle the fetch, display and marking of messages on-the-fly, as well as ensuring the fine details of displaying and scrolling through channels remained unchanged.
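The on-demand fetch described above might be sketched roughly as follows. This is a minimal illustration, not Slack's actual client code: `LazyHistoryStore`, `Message` and the injected `fetchHistory` function (standing in for a call such as channels.history) are hypothetical names. The idea is that history is requested only when a channel is first displayed, and that concurrent requests for the same channel share one network call.

```typescript
// Hypothetical shapes for illustration; not Slack's real data model.
interface Message {
  ts: string;
  text: string;
}

// Stand-in for an API call such as channels.history (signature assumed).
type HistoryFetcher = (channelId: string) => Promise<Message[]>;

class LazyHistoryStore {
  private readonly fetchHistory: HistoryFetcher;
  private readonly loaded = new Map<string, Message[]>();
  private readonly inFlight = new Map<string, Promise<Message[]>>();

  constructor(fetchHistory: HistoryFetcher) {
    this.fetchHistory = fetchHistory;
  }

  // True once a channel's history is available locally.
  isLoaded(channelId: string): boolean {
    return this.loaded.has(channelId);
  }

  // Returns history for a channel, fetching it on first use only.
  getHistory(channelId: string): Promise<Message[]> {
    const cached = this.loaded.get(channelId);
    if (cached !== undefined) {
      return Promise.resolve(cached);
    }
    const pending = this.inFlight.get(channelId);
    if (pending !== undefined) {
      return pending; // a fetch is already under way; share it
    }
    const request = Promise.resolve()
      .then(() => this.fetchHistory(channelId))
      .then(
        (messages) => {
          this.inFlight.delete(channelId);
          this.loaded.set(channelId, messages);
          return messages;
        },
        (err) => {
          // Forget failed requests so a later channel switch can retry.
          this.inFlight.delete(channelId);
          throw err;
        }
      );
    this.inFlight.set(channelId, request);
    return request;
  }
}
```

With a structure like this, behaviours such as auto-scrolling or placing the “new messages” divider would wait on the returned promise rather than assuming history is already present.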

“One does not simply cache everything”

At the intersection of Wu-Tang fans and software engineers, Cache Rules Everything Around Me has inspired both branch names and commit messages. While a powerful tool, caching is best applied carefully and in specific scenarios. Not unlike “loading the world up front”, simply trying to cache everything can quickly become expensive, unreliable or unwieldy.

In the case of the Slack desktop client, we were initially caching a lot of client data in the browser’s LocalStorage as it seemed logical to speed up load time and prevent redundant API calls. Ultimately, it proved to be more trouble than it was worth; LocalStorage access was slow and error-prone, and given our new approach of lazy-loading message history in channels, it made less sense to keep this ever-growing long tail of data in cache.

LocalStorage has a number of performance problems and other restrictions which we ran into (and ultimately avoided by reducing our usage of it). We also tried some creative workarounds during the process.

Problems: Synchronous blocking on data I/O

Slack was previously storing client model information for users, bots, channels and some messages in LocalStorage. We would spend time loading much of this data immediately from LocalStorage while loading the client, so we would not have to load much from the network.

LocalStorage read/write is synchronous, so it blocks the browser’s main thread while it works. In some cases, the client could be effectively blocked for several seconds while many large key/value pairs were read from disk. In the worst case, fetching this data over the network could be faster than fetching it from LocalStorage. At the very least, the network request always has the advantage of being asynchronous.
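To see how long a batch of reads holds up the main thread, one could time them directly. The sketch below is illustrative only: `SyncStore` is a hypothetical interface matching the `getItem` method of `window.localStorage`, and `readAllBlocking` is a made-up helper name.

```typescript
// Minimal synchronous read interface; window.localStorage satisfies it.
interface SyncStore {
  getItem(key: string): string | null;
}

interface BlockingReadResult {
  values: Map<string, string>;
  elapsedMs: number;
}

// Reads every key in turn and reports how long the caller was blocked.
function readAllBlocking(store: SyncStore, keys: string[]): BlockingReadResult {
  const start = Date.now();
  const values = new Map<string, string>();
  for (const key of keys) {
    // Each call returns only once the value has been read.
    const raw = store.getItem(key);
    if (raw !== null) {
      values.set(key, raw);
    }
  }
  return { values, elapsedMs: Date.now() - start };
}
```

In a browser this would be called as `readAllBlocking(window.localStorage, keys)`; no other work on the main thread can run until it returns.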

Problems: Storage size limitations

LocalStorage typically has an upper limit on data usage, usually between 5 and 10 MB per origin. There is also a per-key size limit, which varies by browser.

Like a hard drive running out of space, there is a risk that some, but not all, key/value pairs could be written successfully to LocalStorage when performing a series of writes. This introduces the possibility of leaving the cache in a corrupt, incomplete or otherwise invalid state. This can be largely prevented by catching exceptions thrown on write, and even reading back the data written to LocalStorage if you want to be absolutely certain. The latter introduces another synchronous I/O hit, however, making writes even more expensive.
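A defensive batch write along these lines might look like the sketch below. It is one possible policy, not necessarily what the Slack client did: `writeBatch` and `KeyValueStore` are hypothetical names, and the choice to drop every key in the batch on failure assumes the data is a disposable cache that can be refetched.

```typescript
// Subset of the Web Storage interface; window.localStorage satisfies it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

// Writes all entries, or leaves none of them behind.
// Returns true when every entry was written (and verified, if requested).
function writeBatch(
  store: KeyValueStore,
  entries: Array<[string, string]>,
  verify: boolean = false
): boolean {
  try {
    for (const [key, value] of entries) {
      // In browsers, setItem throws (e.g. QuotaExceededError) when full.
      store.setItem(key, value);
      // Optional read-back check: costs one more synchronous read per key.
      if (verify && store.getItem(key) !== value) {
        throw new Error("read-back mismatch for key " + key);
      }
    }
    return true;
  } catch {
    // Assumed policy: remove the whole batch so no partial state remains.
    for (const [key] of entries) {
      store.removeItem(key);
    }
    return false;
  }
}
```

Removing the batch trades a cache miss for consistency; a caller that gets `false` back would simply fall back to the network.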

Problems: Occasional database corruption

Once in a blue moon, LocalStorage will fail in strange and spectacular ways. The underlying storage data file on disk used by the browser can occasionally become corrupted by a power outage or hardware failure, leading to NS_ERROR_FILE_CORRUPTED errors when attempting LocalStorage reads or writes. If LocalStorage becomes corrupted in this way, the user may have to clear cookies and offline storage, reset their browser’s user profile, or even delete a .sqlite file from their hard drive to fix this issue. While rare, this is not a fun exercise for a user to have to walk through.

Added complexity: lz-string compression (and web workers and asynchronicity, oh my!)

Given the variation of storage size limits for LocalStorage across different browsers, we adopted the lz-string library so we could write compressed data to disk. Because lz-string could operate slowly on very large strings, the compression was moved into a web worker so as not to block the browser’s main UI thread.

With the extra step of asynchronous compression before writing and the synchronous cost of I/O, our LocalStorage module needed a cache to prevent repeated slow reads, and a write buffer for data that had not yet been compressed and written to disk.

When data was inbound for LocalStorage, it would go to the write buffer first. If another part of our app asked for the same data from LocalStorage during that time, the buffer served as a cache — preventing slow, synchronous fetches of potentially-stale data that also now required a decompression step.

After a period of user inactivity, the pending write buffer was flushed to disk. When flushing, key values were sent off to the compression module, and ultimately written to LocalStorage when the compressed data was returned. At that point, the buffer was cleared.
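The buffer-then-flush flow described above could be sketched as follows. This is a simplified illustration under stated assumptions, not the original module: `BufferedStore` is a hypothetical name, the `compress` and `decompress` functions are injected placeholders (in the setup described, lz-string running in a web worker would fill the compression role), and the separate read cache and inactivity timer are left out.

```typescript
// Subset of the Web Storage interface; window.localStorage satisfies it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Assumed signatures: compression is asynchronous (e.g. done in a worker),
// decompression is synchronous.
type Compress = (plain: string) => Promise<string>;
type Decompress = (packed: string) => string;

class BufferedStore {
  private readonly store: KeyValueStore;
  private readonly compress: Compress;
  private readonly decompress: Decompress;
  // Values waiting to be compressed and written; reads check here first.
  private readonly pending = new Map<string, string>();

  constructor(store: KeyValueStore, compress: Compress, decompress: Decompress) {
    this.store = store;
    this.compress = compress;
    this.decompress = decompress;
  }

  // Inbound data goes to the write buffer only; nothing touches storage yet.
  set(key: string, value: string): void {
    this.pending.set(key, value);
  }

  // Serves pending writes from memory, avoiding a slow and stale storage read.
  get(key: string): string | null {
    const buffered = this.pending.get(key);
    if (buffered !== undefined) {
      return buffered;
    }
    const packed = this.store.getItem(key);
    return packed === null ? null : this.decompress(packed);
  }

  pendingCount(): number {
    return this.pending.size;
  }

  // Compresses and writes each buffered value, then drops it from the buffer.
  async flush(): Promise<void> {
    const snapshot = Array.from(this.pending.entries());
    for (const [key, value] of snapshot) {
      const packed = await this.compress(value);
      this.store.setItem(key, packed);
      // Keep the entry if it was overwritten while compression was running.
      if (this.pending.get(key) === value) {
        this.pending.delete(key);
      }
    }
  }
}
```

In this sketch `flush()` would be triggered after a period of user inactivity. Entries are removed from the buffer one key at a time, rather than all at once, so that a value changed mid-flush is not lost.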

If nothing else, the only time we would really need to write to LocalStorage was right before the user reloaded, closed their Slack tab or exited the browser (or app) entirely. Our ultimate goal was to persist some data across browser sessions, including quitting and relaunching the browser itself. Even if we attempted a synchronous compress-and-write operation at or before the window’s unload event, there was no absolute guarantee that data would make it to disk.

What didn’t work

Compression was a trade-off; it bought us some time while we worked on reducing our use of LocalStorage, at the cost of some performance and code complexity.

Compressing and decompressing data is not free; both cost memory and CPU time. Unpacking compressed data can cause a spike in memory use, and large numbers of new, short-lived objects created by the process can contribute to increased garbage collection pauses.

Complexity increased in a few ways:

- LocalStorage writes became asynchronous, with compression performed in a web worker. This made writes, and the read of pending writes, non-trivial.

- LocalStorage I/O needed to be more carefully managed with buffers and cache, for consistency (i.e., reading pending writes) and performance.

- “Legacy” clients’ LocalStorage had to be identified and migrated to the new compressed format.

Learning from this, we decided it was better to avoid the extra work associated with increasingly-large amounts of data entirely. Instead, we started working toward a lean / lazy client model which can start practically empty, and quickly fetch the relevant data it needs — avoiding cache entirely, if need be.

Ditching message history from LocalStorage

As teams grew rapidly in size, we found that instead of making clients faster, our use of LocalStorage seemed to cause some real lag in the Slack UI. There was a cost to the amount of data, and the frequency with which we were doing LocalStorage I/O. The more channels a user was in, the more messages there were, and the more time was spent in synchronous LocalStorage I/O.

Today, we no longer store messages in LocalStorage, but we do continue to store a few other convenience items: text that you typed into the message box but didn’t send, the state of the UI (files, activity or team list opened in the right-side panel), and the like. Dropping messages from LocalStorage — with an eye toward dropping user and channel data — eliminates a significant long-tail use of client resources, in favour of storing only ephemeral data and state created by the local user.
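Storing only a small piece of local state keeps the storage code simple. The sketch below shows one way to persist and restore such state defensively. All names here (`UiState`, `UI_STATE_KEY`, `saveUiState`, `loadUiState`) and the shape of the state are hypothetical, not Slack's actual format.

```typescript
// Subset of the Web Storage interface; window.localStorage satisfies it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Hypothetical shape: unsent drafts per channel and the open right-side panel.
interface UiState {
  drafts: Record<string, string>;
  rightPanel: string | null;
}

const UI_STATE_KEY = "ui_state"; // hypothetical key name

function defaultUiState(): UiState {
  return { drafts: {}, rightPanel: null };
}

// Returns false instead of throwing if the write fails (e.g. storage full).
function saveUiState(store: KeyValueStore, state: UiState): boolean {
  try {
    store.setItem(UI_STATE_KEY, JSON.stringify(state));
    return true;
  } catch {
    return false;
  }
}

// Falls back to defaults when the stored value is missing or unreadable.
function loadUiState(store: KeyValueStore): UiState {
  try {
    const raw = store.getItem(UI_STATE_KEY);
    if (raw === null) {
      return defaultUiState();
    }
    const parsed = JSON.parse(raw);
    // Shallow shape check only; individual draft values are not validated.
    if (
      parsed === null ||
      typeof parsed !== "object" ||
      parsed.drafts === null ||
      typeof parsed.drafts !== "object" ||
      Array.isArray(parsed.drafts)
    ) {
      return defaultUiState();
    }
    const rightPanel = typeof parsed.rightPanel === "string" ? parsed.rightPanel : null;
    return { drafts: parsed.drafts as Record<string, string>, rightPanel };
  } catch {
    return defaultUiState();
  }
}
```

Because the state is small and user-created, a failed read or write costs little: the client starts from defaults rather than blocking on, or trusting, whatever is on disk.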

In retrospect, perhaps SessionStorage could have served our needs. We did not make the switch with this project, but it may make sense in a future where we are storing minimal, and mostly temporary, data. While it has similar size limits to LocalStorage, SessionStorage is more ephemeral in nature. It can persist through tab closing/restoring and reloads, but does not survive a quit and re-launch of the browser. We still like to persist a few items (e.g., your UI state) in more permanent storage, but perhaps that could be stored as an account preference on our backend at some point in the future.

Conclusions

Software development often involves a balance of developing simply and quickly for product features, and developing for performance at scale. As you design and build, it’s helpful to identify potential performance hotspots in your architecture for the day when your project — or even just a particular feature of it — suddenly becomes popular with users.

- Assume the volume of objects in your world (e.g., members, channels, messages) may increase by an order of magnitude, perhaps several times over, as usage of your product grows.

- Be ready to retrofit or implement new methods and take new approaches to reduce the amount of data your app consumes, loads or caches.

- Avoid “loading the world” and enjoy being lazy — even if it does require more work up front.

- Avoid using LocalStorage in general.

- Hire really smart people who enjoy working on these kinds of things.