Batten down the hatches! The app sandbox is now enabled for all web content. This is a fancy way of saying we’ve dialed up the security of the app. It wasn’t unsafe before, but it’s double safe now.

What is the “app sandbox,” what is it protecting against, and why does it matter? This post attempts to answer those questions, and provides a technical guide for Electron developers who want to bring their app’s security model more in line with Chromium’s. It’s divided into two parts:

- Broadly: what is the sandbox and what attack vectors does it prevent?

- Specifically: how was sandboxing implemented in our Electron app?

Let’s start with an analogy for sandboxing, brought to you by political science.

Checks and Balances

Imagine that you’re designing a government, and you install a leader (call it a monarch or president) at the top of your hierarchy. This president is granted special powers via election, and those powers make their job easier by removing obstacles. Phrased differently: they have shortcuts to alter the system. This is all well and good until a malicious leader begins acting unilaterally to accomplish their goals: creating new laws out of thin air, declaring war over trifles, etc. Worse still, those goals are detrimental to the system and out of alignment with prior leaders.

To handle this case you might add safeguards that limit the damage a bad actor can do. You might start with a clause that allows the leader to be removed in some exceptional cases. You could add your own version of the War Powers Act, forcing them to seek approval from an external committee before taking military action. If all of these were ineffective you might mark the leader untrusted and begin to ignore some of their requests. And that, in a nutshell, is a kind of sandbox. It’s a fundamental shift in assumptions that lets us handle malicious actors within the system.

It’s hard to do at a time like this, but let’s take this analogy back to our problem domain. Recall that Electron is a marriage of traditional web content (Chromium) with some blessed JavaScript that can alter the system (Node). When our app is functioning normally, giving a traditional webpage the ability to write files or create new windows enables the rich functionality that folks have come to expect of a desktop app. But if a bad actor has control of the webpage, via something like a cross-site scripting (XSS) vulnerability, its Node powers can be co-opted for evil:

```javascript
// Example of XSS in a naïve notifications window
window.store.dispatch({
  type: 'NEW_NOTIFICATION',
  payload: {
    content: `<img src=x onerror="${getPayload()}" />`
  }
});

// Since nodeIntegration is enabled, this escalates any XSS
// to remote code execution (RCE)!
function getPayload() {
  // The shell command has to stay quoted inside the generated source string
  return `require('child_process').exec('open /Applications/Calculator.app')`;
}
```

And you might think, “I won’t pollute the global scope like that,” or even “None of my content uses Node integration.” But don’t underestimate the ingenuity of would-be attackers! Our bug bounty program is filled with clever trapdoors; here’s an example that relied on us overriding window.open.

```javascript
// Hijack the custom window.open method in our preload
window.desktop.shouldUseNativeWindowOpen = () => true;
window.desktop.window = {};
window.desktop.window.open = () => 1;

// Make a BrowserWindow instance using it
browserWindow = window.open('about:blank');

// Make another BrowserWindow from that, with nodeIntegration!
nodeEnabledWindow = new browserWindow.constructor({
  show: false,
  webPreferences: { nodeIntegration: true }
});

// Oops, RCE
nodeEnabledWindow.loadURL('about:blank');
nodeEnabledWindow.webContents.executeJavaScript(
  `require('child_process').exec('open /Applications/Calculator.app')`
);
```

You might say, “If I were building a website, I’d simply prevent all XSS.” Alas, there are other routes of attack. For example, does your app display an image from the user? Cleverly constructed images can lead to RCE¹, sometimes even those sent to your backend for processing². Even the innocuous task of parsing JSON has been exploited before.³ RCE, like love, is all around us. In an environment with so many potential avenues to defend, how do we keep our users safe? Kenton Varda, at Cloudflare, says it best:⁴

Well, here’s the thing, nothing is secure. Security is not an on or off thing. Everything has bugs. Virtual machines have bugs, kernels have bugs, hardware has bugs. We really need to be thinking about risk management, ways that we can account for the fact that there are going to be bugs and make sure that they have minimum impact.

Submarine designers don’t ignore the possibility of a leak somewhere on the vessel; they make containment essential to its operation. The decision Chromium arrived at, many years ago, was a similar kind of last-resort mitigation.⁵ In the browser landscape, they were uniquely positioned, with a multi-process architecture that separates web content from a trusted orchestrator. In that sense their “containers” were already drawn up. By flipping the assumption of web content from trusted to compromised, and constraining what it could do, they limited the consequences of a worst-case scenario. Electron is a fledgling framework in comparison, but, being based on Chromium, it can leverage these security features. In Electron this capability is available as the sandbox option, under webPreferences.⁶
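As a rough illustration of where that option lives, here is a minimal sketch of the window options one might build in the main process. The helper name sandboxedWindowOptions and the preload path are hypothetical; sandbox, contextIsolation, nodeIntegration, and preload are the Electron web preferences discussed in this post.

```javascript
// Sketch only: builds the options object one might pass to Electron's BrowserWindow.
// The helper name and the preload path are hypothetical.
function sandboxedWindowOptions(preloadPath) {
  return {
    webPreferences: {
      sandbox: true,          // run the renderer inside Chromium's sandbox
      contextIsolation: true, // keep preload globals separate from page globals
      nodeIntegration: false, // no require() or Node globals in web content
      preload: preloadPath,   // the one script allowed to talk to the main process
    },
  };
}

// In the main process this could be used roughly as follows:
//   const { app, BrowserWindow } = require('electron');
//   const path = require('path');
//   app.whenReady().then(() => {
//     const options = sandboxedWindowOptions(path.join(__dirname, 'preload.js'));
//     const win = new BrowserWindow(options);
//     win.loadURL('https://example.com');
//   });
```

Keeping the preferences in one helper makes it harder for a stray window elsewhere in the app to be created without them.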

There are, as always, tradeoffs to consider before enabling this feature. Critically, turning on the sandbox means your renderer processes cannot use Node, or any external module that depends on Node’s core modules (e.g., fs, crypto, or child_process). It also dramatically reduces the surface area of Electron that’s available in the renderer: just about all you can do is send messages to the main process (and as it turns out, that’s all you’ll need). With the new abstractions Electron provides around V8 primitives, you should be able to sandbox your web app without any loss in functionality. How we did that is the topic of the next section.

Breaking the Chain

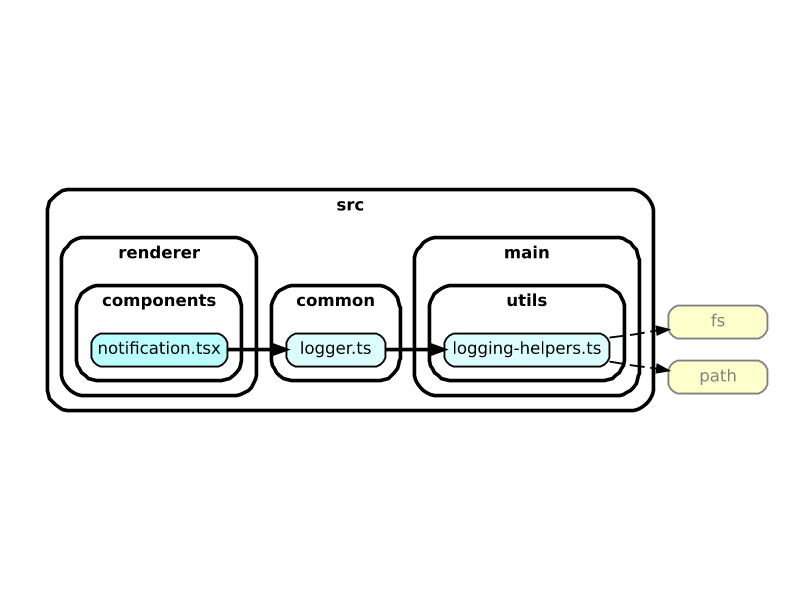

In making the move to sandbox, the first area we stumbled on was the organization of our code. Although we weren’t often referencing Node in our renderer bundles, we had a common folder of utilities that, theoretically, could be shared across main and renderer processes. Over the years, that folder had accumulated all kinds of methods: not just ones that were genuinely reusable. They were often grouped into overly large and ambiguous files: e.g., a logging-helpers file with both (reusable) string utilities and (non-reusable) fs utilities. Renderer code might import one of those files to get at a string utility and take a dependency on fs as a side effect. Untangling this web of dependencies was no small feat, but one tool that made it more manageable was dependency-cruiser.⁷ It let us visualize import chains to see how code was getting pulled in, and, once rearranged, prevented similar imports with validation rules: like a linter for code organization.

Here’s a toy example illustrating the kind of graphs that helped us. In it we see that a renderer-side component is referencing fs and path, from a chain that starts with common/logger:
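As a textual stand-in for that kind of graph, the sketch below models a tiny import graph by hand and walks it to find every chain that ends in a Node core module. The file names are hypothetical, and this is not how dependency-cruiser works internally; it only shows the shape of the problem.

```javascript
// Hypothetical module graph: each key imports the modules listed in its array.
const imports = {
  'renderer/notification-view': ['common/logger'],
  'common/logger': ['common/logging-helpers'],
  'common/logging-helpers': ['fs', 'path'],
};

// A small subset of Node built-ins that renderer code should never reach.
const coreModules = new Set(['fs', 'path', 'crypto', 'child_process']);

// Depth-first search that returns every import chain ending in a core module.
function chainsToCore(entry, graph, seen = []) {
  if (coreModules.has(entry)) return [[...seen, entry]];
  if (seen.includes(entry)) return []; // guard against circular imports
  const next = graph[entry] || [];
  return next.flatMap((dep) => chainsToCore(dep, graph, [...seen, entry]));
}

for (const chain of chainsToCore('renderer/notification-view', imports)) {
  console.log(chain.join(' -> '));
}
```

Each printed line is one path from the renderer component to a built-in, which is the information the graph conveys visually.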

In practice it was not uncommon to see import chains dozens of files long, with thousands of nodes rendered, but with options like exclude, focus, and doNotFollow you can prune the tree into something legible. An unexpected benefit of this exercise was trimming the size of our JS bundles (particularly the preload, which runs on every page navigation) by relocating unexpected code or removing unused code. After this Marie Kondo maneuver, our folder structure became more representative of the webpack bundles we make at build time, with distinct main, renderer, and preload folders. We used dependency-cruiser rules to restrict “crossing the streams,” that is, having references between bundles. You can even block Node imports with a rule like:

```javascript
{
  name: 'no-node-in-renderer',
  comment: 'The renderer process should not use Node built-ins',
  severity: 'error',
  from: {
    path: '^src/(renderer|preload|common)',
  },
  to: {
    dependencyTypes: ['core']
  }
}
```

Bridging the Gap

If you make apps that embed web content, you already know the importance of the preload script for extension and customization. It’s your only chance to expose desktop functionality to the page, and to do it safely you’ll need to use context isolation. As a web preference, it’s a cinch to turn on, but once you do you’ll be unable to expose objects from the preload to your guest page, which is kind of the whole point of using a preload script. So, what gives? Well, a preload script is a type of content script, and, in Chromium, content scripts are as isolated from one another⁸ as my Flatbush mate’s Twitter timeline is from your uncle’s Facebook feed in Minnesota. While undesirable for society, this is a good thing for JavaScript. It means that the script running in a Chrome extension you installed at the behest of a Redditor can’t, for example, redefine JSON.parse in the page you are viewing now. But it’s less convenient when it comes to the scripts running side-by-side in your Electron app: you sure wish they could have an honest dialogue.

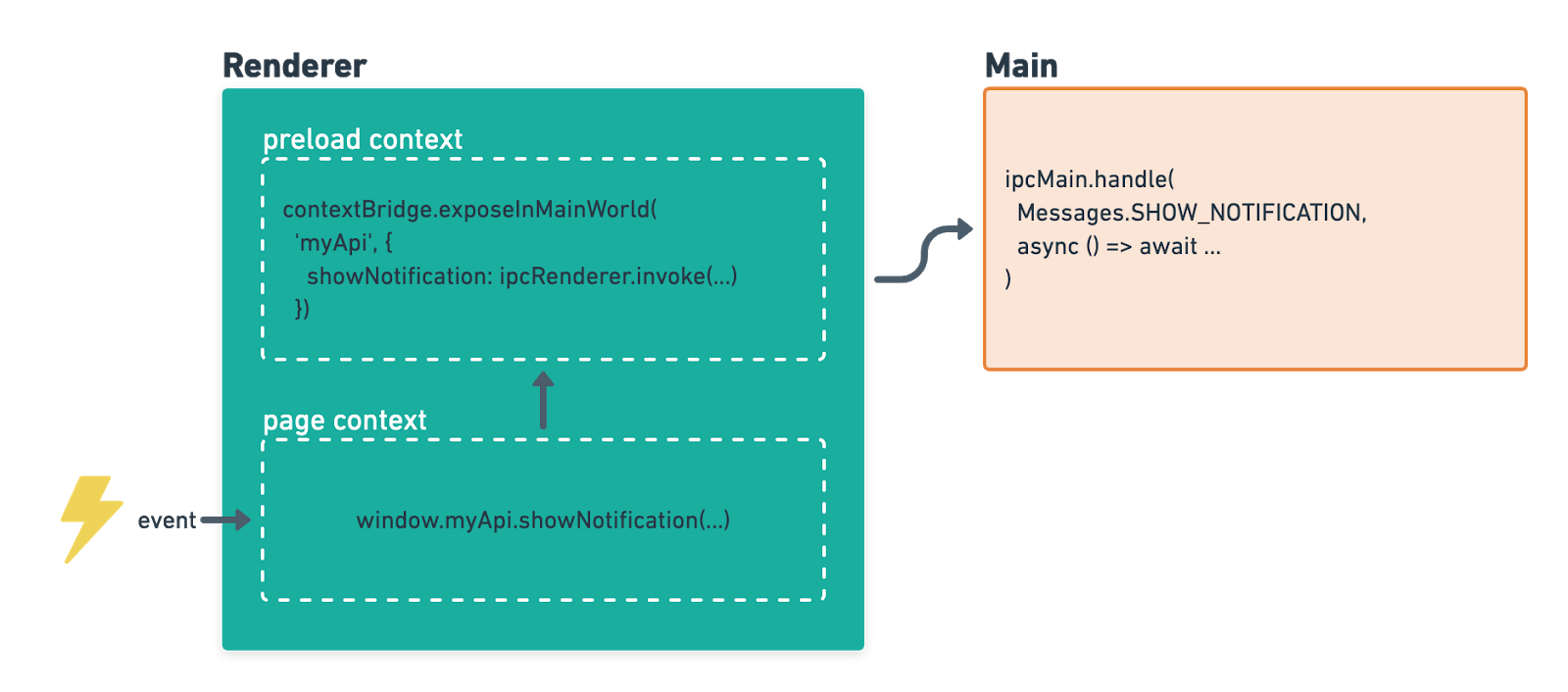

To address this shortcoming, Electron added a new module called contextBridge.⁹ It lets you build a bridge between two isolated worlds–in our case, the preload script and the web app–in the form of a global object. Here’s a quick example to show how these pieces fit together. Say we want to use a Node module to display a notification when an event occurs in the guest page. Prior to the sandbox, we could have used the module within the preload script. Prior to context isolation, we could have exposed myApi to the page by assigning it on the global scope. But in this new world, our setup looks like:
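A minimal sketch of what such a setup could look like follows. The channel name show-notification and the notify helper (standing in for whatever Node module displays the notification) are assumptions made for illustration; contextBridge.exposeInMainWorld, ipcRenderer.invoke, and ipcMain.handle are Electron APIs. The two halves are written as functions that receive the Electron modules as arguments, which keeps the wiring visible.

```javascript
// Preload side: runs sandboxed and context-isolated.
// In a real preload script one might call exposeApi(require('electron')).
function exposeApi({ contextBridge, ipcRenderer }) {
  contextBridge.exposeInMainWorld('myApi', {
    // The page gets this one function, never ipcRenderer itself.
    showNotification: (title, body) =>
      ipcRenderer.invoke('show-notification', { title, body }),
  });
}

// Main-process side: the only place with Node access.
// `notify` is a hypothetical stand-in for the Node module that shows the notification.
function registerHandlers({ ipcMain, notify }) {
  ipcMain.handle('show-notification', async (_event, message) => {
    // Reject anything that is not exactly the shape we expect.
    const valid =
      message !== null &&
      typeof message === 'object' &&
      typeof message.title === 'string' &&
      typeof message.body === 'string';
    if (!valid) throw new Error('Rejected malformed notification request');
    // Whatever this returns is what the page's promise resolves to.
    return notify(message.title, message.body);
  });
}

// In the guest page (no Node, no Electron modules):
//   await window.myApi.showNotification('Build finished', 'All tests passed');
```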

Notice the following changes:

- We use contextBridge to create a global in the page context, instead of assigning to window.

- Because of the sandbox, all we can do in the preload context is send a message to the main process.

- It’s up to the main process to filter out harmful or unspecified messages.

- If the handler in the main process returns a Promise, that Promise will be marshaled across both boundaries: IPC and the context bridge! In other words, awaiting the showNotification call in-page would represent completion in the main process.

The takeaway is that everything your app could do prior to these security features, you can still do now. It might just take a few more hops. 🐇

We hope this helps you make Electron apps that are every bit as secure as the browser. Sandboxing your app is not easy to do, particularly when carrying over legacy infrastructure: Slack is one of the first collaboration apps to do it. We’d like to thank the researchers who contributed bug reports nudging us in this direction: particularly Oskars Vegeris and Matt Austin. If you work in security and this sounds interesting to you, we’re hiring!

References

1. https://www.cvedetails.com/vulnerability-list.php?vendor_id=7294&product_id=&version_id=&page=1&hasexp=0&opdos=0&opec=0&opov=0&opcsrf=0&opgpriv=0&opsqli=0&opxss=0&opdirt=0&opmemc=0&ophttprs=0&opbyp=0&opfileinc=0&opginf=0&cvssscoremin=0&cvssscoremax=0&year=0&month=0&cweid=0&order=3&trc=40&sha=37ea9101e528b7bb9d497af3f08dbffa18a8fe26

2. https://imagetragick.com

3. https://nvd.nist.gov/vuln/detail/CVE-2014-3188

4. https://www.infoq.com/presentations/cloudflare-v8/

5. https://blog.chromium.org/2008/10/new-approach-to-browser-security-google.html

6. https://www.electronjs.org/docs/api/sandbox-option

7. https://github.com/sverweij/dependency-cruiser

8. https://developer.chrome.com/extensions/content_scripts#execution-environment

9. https://www.electronjs.org/docs/api/context-bridge