Slack launched GovSlack in July 2022. With GovSlack, government agencies, and those they work with, can enable their teams to seamlessly collaborate in their digital headquarters while keeping security and compliance at the forefront. GovSlack offers the following benefits:

- Supports key government security standards, such as FedRAMP High, DoD IL4, and ITAR

- Runs in AWS GovCloud data centers

- Enables external collaboration with other GovSlack-using organizations through Slack Connect

- Provides access to your own set of encryption keys for advanced auditing and logging controls

- Allows permission and access controls at scale through Slack’s enterprise-grade admin dashboard

- Includes a directory of curated applications (including DLP and eDiscovery apps) that can integrate with Slack

- Is maintained and supported by US personnel

Before the big launch, the Cloud Foundations team spent almost two quarters setting up the infrastructure needed to run GovSlack.

GovSlack is the first service Slack has launched on AWS GovCloud infrastructure. As a result, we spent a significant amount of time learning the differences between standard and GovCloud AWS, and changing our tooling and platform so they could run on GovCloud.

In this blog post, we look at how we built the AWS infrastructure needed for GovSlack and the challenges we faced. If you’re thinking about building a new service on AWS GovCloud, this post is for you.

How are GovCloud accounts related to commercial accounts?

Not long ago, Slack started moving from a single AWS account to child accounts. As part of this project, we also made significant changes to our global network infrastructure. You can read more about this in the blog posts Building the Next Evolution of Cloud Networks at Slack and Building the Next Evolution of Cloud Networks at Slack – A Retrospective. We were able to apply most of what we learned there to building the GovSlack network infrastructure.

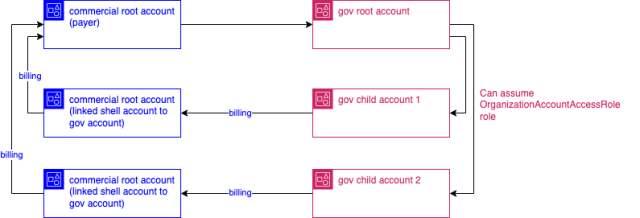

First of all, AWS GovCloud accounts have no billing capability of their own. Resources in GovCloud accounts propagate their billing to a linked shell commercial AWS account, which is created automatically when you request a GovCloud account. So the first thing we had to do was request a Gov root AWS account using our root payer commercial account. This was a lengthy process, though not because it was technically difficult: it was as simple as clicking a button in our root commercial AWS account. However, adding the Gov accounts to our existing agreements with AWS took a few weeks. Once we had our Gov root account, we were able to request more GovCloud accounts for our service teams. It’s worth mentioning that GovCloud child accounts still need to be requested through the commercial AWS Organizations API, using the create-gov-cloud-account call.

When a new GovCloud child account is created, you can assume the OrganizationAccountAccessRole in the child account via the GovCloud root account’s OrganizationAccountAccessRole (the role name may differ if you override it with the --role-name flag).
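As a minimal sketch, assume Terraform runs with credentials from the GovCloud root account. The hop into a new child account can then be written as an AWS provider `assume_role` block. The `govcloud_child_account_id` variable and the session name are hypothetical. Note that the role ARN must use the `aws-us-gov` partition.

```hcl
# Hypothetical: the GovCloud account ID returned when the child account was created.
variable "govcloud_child_account_id" {
  type = string
}

# Assumes the ambient credentials belong to the GovCloud root account.
provider "aws" {
  region = "us-gov-west-1"

  assume_role {
    # GovCloud ARNs use the "aws-us-gov" partition, not "aws".
    role_arn     = "arn:aws-us-gov:iam::${var.govcloud_child_account_id}:role/OrganizationAccountAccessRole"
    session_name = "child-account-bootstrap" # hypothetical session name
  }
}

# Confirms which account and partition the provider ended up in.
data "aws_caller_identity" "child" {}

output "child_caller_arn" {
  value = data.aws_caller_identity.child.arn
}
```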

Let’s look at what are these links look like in a diagram:

As we can see above, all our GovCloud resources costs are propagated to our root commercial AWS account.

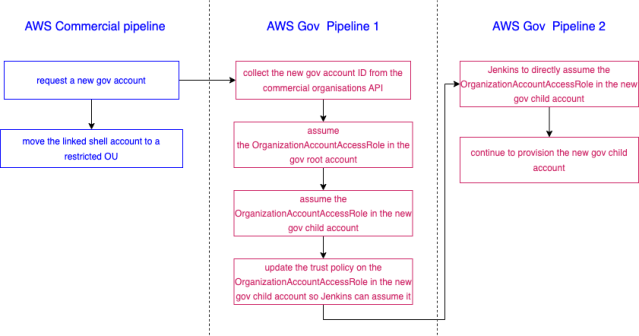

How did we create GovCloud accounts?

As discussed above, we use the AWS Organizations API and the create-gov-cloud-account call to request a new GovCloud child account. This process creates two new accounts: the GovCloud account and the linked commercial AWS account. We use a pipeline on the commercial side for this portion of the process. The linked commercial AWS account is then moved to a highly restricted OU, which blocks it from creating any AWS resources.
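The commercial half of this step could look roughly like the Terraform below. This is a hypothetical sketch, not our actual pipeline. The account name, email, and OU ID are placeholders, and the sketch assumes credentials for the commercial management (root payer) account.

The AWS provider’s `aws_organizations_account` resource can request the paired GovCloud account with `create_govcloud = true`. Here `parent_id` places the linked commercial account straight into the restricted OU instead of moving it afterwards. A deny-all service control policy on that OU keeps the account from creating resources.

```hcl
# Runs against the commercial management (root payer) account.
provider "aws" {
  region = "us-east-1"
}

# Hypothetical ID of the highly restricted OU for linked shell accounts.
variable "restricted_ou_id" {
  type = string
}

# Creates the linked commercial account plus its paired GovCloud account.
resource "aws_organizations_account" "gov_child" {
  name            = "govslack-example"             # hypothetical
  email           = "govslack-example@example.com" # hypothetical
  create_govcloud = true

  # Places the linked commercial account in the restricted OU.
  parent_id = var.restricted_ou_id
}

# Deny every action in the restricted OU so no resources can be created there.
data "aws_iam_policy_document" "deny_all" {
  statement {
    effect    = "Deny"
    actions   = ["*"]
    resources = ["*"]
  }
}

resource "aws_organizations_policy" "deny_all" {
  name    = "deny-all-linked-shell-accounts" # hypothetical
  type    = "SERVICE_CONTROL_POLICY"
  content = data.aws_iam_policy_document.deny_all.json
}

resource "aws_organizations_policy_attachment" "restricted_ou" {
  policy_id = aws_organizations_policy.deny_all.id
  target_id = var.restricted_ou_id
}

# The GovCloud account ID, needed by the GovCloud-side pipeline.
output "govcloud_account_id" {
  value = aws_organizations_account.gov_child.govcloud_id
}
```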

We use a Jenkins pipeline in the AWS GovCloud partition to configure the GovCloud child account. We can assume the OrganizationAccountAccessRole of the new child account from the GovCloud root account as soon as the account is created. However, our Gov Jenkins services run in a dedicated child account, so one step in this pipeline updates the child account’s OrganizationAccountAccessRole trust policy so that the Jenkins workers can assume it. This step must complete before we can move on to the rest of the child account configuration.
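A hypothetical Terraform sketch of that trust-policy step is shown below. It assumes the provider’s credentials already resolve to the new child account. The Gov root account ID and the Jenkins worker role ARN are placeholders. The same change could equally be made with the IAM `UpdateAssumeRolePolicy` API.

```hcl
# Assumes the ambient credentials already resolve to the new GovCloud child
# account (for example, via the Gov root account's OrganizationAccountAccessRole).
provider "aws" {
  region = "us-gov-west-1"
}

variable "gov_root_account_id" {
  type = string
}

# Hypothetical, e.g. "arn:aws-us-gov:iam::111111111111:role/jenkins-worker".
variable "jenkins_worker_role_arn" {
  type = string
}

# Trust both the Gov root account (for bootstrapping) and the Jenkins workers.
data "aws_iam_policy_document" "org_access_trust" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type = "AWS"
      identifiers = [
        "arn:aws-us-gov:iam::${var.gov_root_account_id}:root",
        var.jenkins_worker_role_arn,
      ]
    }
  }
}

# Bring the role created by AWS Organizations under Terraform management
# (import blocks need Terraform 1.5 or later).
import {
  to = aws_iam_role.org_access
  id = "OrganizationAccountAccessRole"
}

# No inline_policy or managed_policy_arns here, so Terraform only changes the
# trust policy and leaves the role's existing permissions alone.
resource "aws_iam_role" "org_access" {
  name               = "OrganizationAccountAccessRole"
  assume_role_policy = data.aws_iam_policy_document.org_access_trust.json
}
```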

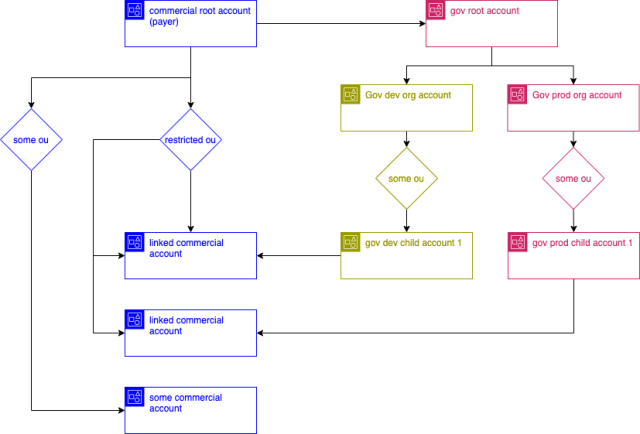

How do we separate GovDev and GovProd?

As mentioned previously, one of the core compliance requirements for the GovCloud environment was that only US persons would be authorized to access the production environment. With this requirement in mind, we decided to stand up two Gov environments: the production Gov environment, known internally as “GovProd”, and a second environment, known as “GovDev”. Anyone can access GovDev to test their services before US personnel deploy them to GovProd.

To ensure complete isolation between these environments, we built them out using a fully shared-nothing paradigm, which lets the environments operate in completely separate AWS organizations. The layer 3 networking mesh we use (Nebula) is also completely disconnected between the two, so the networks are entirely segregated from one another.

To achieve this, we created two AWS organizations in GovCloud and, under each of them, an identical set of child accounts in which we launch our services in the Dev and Prod environments.

Is this really isolated?

When a new child account is created, we need to use the Gov root account to assume the OrganizationAccountAccessRole in the new account for the first part of provisioning, as discussed above. Only US personnel can access the GovProd accounts. Because the Gov root account can assume the OrganizationAccountAccessRole in the child accounts, only US personnel can access the Gov root account as well. Therefore the initial provisioning of GovDev accounts also has to run on GovProd Jenkins, and US personnel must be engaged to kick off the first part of every GovDev account creation.

Other challenges

AWS GovCloud also lacks some AWS services, such as CloudFront and public Route53 hosted zones. Additionally, when using the AWS CLI in GovCloud, we must pass the --region flag or set the AWS_DEFAULT_REGION environment variable to a GovCloud region. Otherwise, the AWS CLI sends API calls to a commercial region.
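On the CLI, the fix is simply `--region us-gov-west-1` (or `us-gov-east-1`). In Terraform, the same guard can be made explicit. The sketch below uses a hypothetical `gov_region` variable whose validation rejects anything that is not a `us-gov-*` region (`startswith` needs Terraform 1.3 or later).

```hcl
# Hypothetical variable guarding against accidentally targeting a commercial region.
variable "gov_region" {
  type    = string
  default = "us-gov-west-1"

  validation {
    condition     = startswith(var.gov_region, "us-gov-")
    error_message = "GovCloud resources must be deployed to a us-gov-* region."
  }
}

# Pin the region explicitly instead of relying on any default.
provider "aws" {
  region = var.gov_region
}
```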

Route53 and ACM

Some of our Gov services use AWS Certificate Manager (ACM) to provide SSL/TLS certificates for their load balancers. We avoid email validation for these certificates because it does not allow us to auto-renew expiring certificates. DNS validation supports auto-renewal but requires publicly resolvable DNS records. Therefore, we validate our ACM certificates in the dedicated commercial DNS account that also hosts our public GovSlack DNS records (described in the Route53 section below).
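Below is a minimal Terraform sketch of DNS validation across partitions. It assumes the certificate lives in GovCloud and the validation records go into a public hosted zone in the commercial DNS account. Roles cannot be assumed across partitions, so the second provider needs its own commercial credentials. The `commercial-dns` profile, the domain name, and the zone ID are hypothetical placeholders.

The code follows the AWS provider’s standard `aws_acm_certificate` / `aws_route53_record` / `aws_acm_certificate_validation` pattern rather than our exact setup.

```hcl
# GovCloud: where the load balancer and its certificate live.
provider "aws" {
  region = "us-gov-west-1"
}

# Commercial DNS account; needs separate commercial credentials.
provider "aws" {
  alias   = "commercial_dns"
  region  = "us-east-1"
  profile = "commercial-dns" # hypothetical
}

variable "domain_name" {
  type    = string
  default = "service.example.com" # hypothetical
}

# Hypothetical public hosted zone ID in the commercial DNS account.
variable "public_zone_id" {
  type = string
}

# Request a DNS-validated certificate so ACM can renew it automatically.
resource "aws_acm_certificate" "lb" {
  domain_name       = var.domain_name
  validation_method = "DNS"

  lifecycle {
    create_before_destroy = true
  }
}

# Publish the validation CNAMEs in the commercial public zone.
resource "aws_route53_record" "validation" {
  provider = aws.commercial_dns
  for_each = {
    for dvo in aws_acm_certificate.lb.domain_validation_options : dvo.domain_name => {
      name   = dvo.resource_record_name
      record = dvo.resource_record_value
      type   = dvo.resource_record_type
    }
  }

  zone_id         = var.public_zone_id
  name            = each.value.name
  type            = each.value.type
  records         = [each.value.record]
  ttl             = 60
  allow_overwrite = true
}

# Wait in GovCloud until ACM sees the records and issues the certificate.
resource "aws_acm_certificate_validation" "lb" {
  certificate_arn         = aws_acm_certificate.lb.arn
  validation_record_fqdns = [for r in aws_route53_record.validation : r.fqdn]
}
```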

Route53

AWS GovCloud does not support public Route53 hosted zones, but private hosted zones are allowed. We created GovDev and GovProd DNS accounts to host our private Route53 zones. The Cloud Foundations team creates VPCs in a set of accounts that we manage, then uses AWS Transit Gateways to connect different regions together and build a global network mesh. Lastly, these VPCs are shared into child accounts, which hides the complexity of setting up networks from application teams. You can read more about how we do this in our two other blog posts, Building the Next Evolution of Cloud Networks at Slack and Building the Next Evolution of Cloud Networks at Slack – A Retrospective.

The private Route53 zones we create are attached to the shared VPCs, so as soon as a record is added to these zones, it can be resolved within our VPCs.
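Suppose the private zones live in the GovDev or GovProd DNS account while the shared VPCs are owned by a network account. In that case, attaching them takes two steps: an authorization from the zone owner and an association from the VPC owner. Below is a minimal Terraform sketch of that cross-account case. It assumes an existing private zone, and the `gov-dns` and `gov-network` profiles, zone ID, VPC ID, and record are hypothetical.

```hcl
# GovDev or GovProd DNS account that owns the private hosted zone.
provider "aws" {
  alias   = "dns"
  region  = "us-gov-west-1"
  profile = "gov-dns" # hypothetical
}

# Network account that owns the shared VPC.
provider "aws" {
  alias   = "network"
  region  = "us-gov-west-1"
  profile = "gov-network" # hypothetical
}

variable "private_zone_id" {
  type = string
}

variable "shared_vpc_id" {
  type = string
}

# The zone owner authorizes a VPC from another account...
resource "aws_route53_vpc_association_authorization" "shared_vpc" {
  provider = aws.dns
  vpc_id   = var.shared_vpc_id
  zone_id  = var.private_zone_id
}

# ...and the VPC owner completes the association.
resource "aws_route53_zone_association" "shared_vpc" {
  provider = aws.network
  vpc_id   = aws_route53_vpc_association_authorization.shared_vpc.vpc_id
  zone_id  = aws_route53_vpc_association_authorization.shared_vpc.zone_id
}

# Once the zone is associated, records like this resolve inside the VPC.
resource "aws_route53_record" "example" {
  provider = aws.dns
  zone_id  = var.private_zone_id
  name     = "service.internal.example" # hypothetical
  type     = "A"
  ttl      = 300
  records  = ["10.0.0.10"] # hypothetical
}
```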

However, since GovCloud does not support public hosted zones, we need to create public records on the commercial side. Therefore, we created a dedicated commercial AWS account for hosting public GovSlack DNS records. Access to this commercial DNS account is restricted to US personnel.
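For illustration, a public record in the commercial DNS account that points at a load balancer running in GovCloud might look like the sketch below. The profile, zone ID, hostname, and load balancer DNS name are all hypothetical. A plain CNAME is used because it needs nothing but the load balancer’s DNS name.

```hcl
# Credentials for the dedicated commercial DNS account (hypothetical profile).
provider "aws" {
  region  = "us-east-1"
  profile = "commercial-dns"
}

# Hypothetical public hosted zone in the commercial DNS account.
variable "public_zone_id" {
  type = string
}

# Hypothetical DNS name of a load balancer in us-gov-west-1.
variable "gov_lb_dns_name" {
  type = string
}

# Public record that resolves to the GovCloud load balancer.
resource "aws_route53_record" "app" {
  zone_id = var.public_zone_id
  name    = "app.example.com" # hypothetical hostname
  type    = "CNAME"
  ttl     = 300
  records = [var.gov_lb_dns_name]
}
```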

How do we transfer artefacts between commercial and GovCloud?

AWS does not support assuming roles between AWS standard and AWS GovCloud partitions. Therefore we created a mechanism to compliantly pass objects to GovCloud.

This mechanism ensures that objects are pulled into the AWS GovCloud partition from the standard partition using IAM credentials for the standard partition. These credentials are stored securely in the AWS GovCloud partition.
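The exact mechanism isn’t described here, so the following is only a hypothetical Terraform sketch of its credential side. It creates a read-only IAM user in the standard partition and a Secrets Manager secret in GovCloud to hold that user’s key. Every name (bucket, user, secret, profile) is an assumption, and the process that actually copies objects is left out.

```hcl
# Standard-partition account that owns the artifacts bucket.
provider "aws" {
  alias   = "commercial"
  region  = "us-east-1"
  profile = "commercial-artifacts" # hypothetical
}

# GovCloud account where the puller runs and the credentials are stored.
provider "aws" {
  alias  = "govcloud"
  region = "us-gov-west-1"
}

variable "artifact_bucket" {
  type    = string
  default = "example-artifacts-for-govcloud" # hypothetical
}

# Dedicated, read-only identity in the standard partition.
resource "aws_iam_user" "gov_puller" {
  provider = aws.commercial
  name     = "govcloud-artifact-puller" # hypothetical
}

data "aws_iam_policy_document" "read_artifacts" {
  provider = aws.commercial

  statement {
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::${var.artifact_bucket}"]
  }

  statement {
    actions   = ["s3:GetObject"]
    resources = ["arn:aws:s3:::${var.artifact_bucket}/*"]
  }
}

resource "aws_iam_user_policy" "read_artifacts" {
  provider = aws.commercial
  name     = "read-artifacts"
  user     = aws_iam_user.gov_puller.name
  policy   = data.aws_iam_policy_document.read_artifacts.json
}

# Secret container in GovCloud. The access key itself is created and stored
# out of band so it never lands in Terraform state.
resource "aws_secretsmanager_secret" "puller_credentials" {
  provider    = aws.govcloud
  name        = "commercial/artifact-puller" # hypothetical
  description = "Standard-partition credentials for pulling artifacts into GovCloud"
}
```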

Terraform modules

We use Terraform modules to build our infrastructure as collections of interdependent resources such as VPCs, Internet Gateways, Transit Gateways, and route tables. We wanted to use the same modules to build our Gov infrastructure, so that these patterns stay consistent between the AWS GovCloud and standard partitions. One key difference between commercial and GovCloud resources is their ARNs: commercial ARNs start with arn:aws, whereas GovCloud ARNs start with arn:aws-us-gov.

Therefore, we built a very simple Terraform module called aws_partition. Using its outputs, we can programmatically build ARNs and discover which AWS partition we are in.

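Let’s look at the aws_partition module:

```hcl
# Identify the caller so the partition can be read from its ARN.
data "aws_caller_identity" "current" {}

# Parse the caller ARN into its components (partition, service, account, ...).
data "aws_arn" "arn_details" {
  arn = data.aws_caller_identity.current.arn
}

# "aws" in commercial regions, "aws-us-gov" in GovCloud.
output "partition" {
  value = data.aws_arn.arn_details.partition
}

# True when the partition name contains "gov" (i.e. "aws-us-gov").
output "is_govcloud" {
  value = replace(data.aws_arn.arn_details.partition, "gov", "") != data.aws_arn.arn_details.partition
}
```

Now let’s look at an example usage: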

```hcl
module "aws_partition" {
  source = "../modules/aws/aws_partition"
}

data "aws_iam_policy_document" "example" {
  statement {
    effect = "Allow"

    actions = [
      "sts:AssumeRole",
    ]

    # Renders arn:aws:... in commercial and arn:aws-us-gov:... in GovCloud.
    resources = [
      "arn:${module.aws_partition.partition}:iam::*:role/some-role",
    ]
  }
}

# Only create this AWS Config rule in GovCloud.
resource "aws_config_config_rule" "example" {
  count = module.aws_partition.is_govcloud ? 1 : 0

  name = "example-rule"

  source {
    owner             = "AWS"
    source_identifier = "S3_BUCKET_SERVER_SIDE_ENCRYPTION_ENABLED"
  }
}
```

VPC endpoints

Over the last three years, Slack has been working hard to adopt AWS VPC endpoints for accessing native AWS services in our commercial environment. VPC endpoints reduce latency and increase the resiliency of our systems, while also reducing our networking costs.

With all these advantages, it’s easy to assume that moving to VPC endpoints is simple. However, one glaring issue we found in both our commercial and GovCloud moves is that AWS doesn’t always support every service in every AZ. We often need systems to be able to reach AWS services both with and without VPC endpoints, which can create subtle edge cases that are hard to account for.

AWS continues to roll out VPC endpoints AZ by AZ, but we still have not reached the point where every service we use is available in every region and AZ we run in.
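Below is a sketch of one way to handle partial AZ coverage in Terraform. It takes STS as the example service and assumes a hypothetical map of AZ name to private subnet ID (`subnets_by_az`). The `aws_vpc_endpoint_service` data source reports the AZs where an endpoint service is offered, and the endpoint is placed only in subnets in those AZs. Clients still need a path that works without any endpoint, which is the with-and-without case described above.

```hcl
provider "aws" {
  region = "us-gov-west-1"
}

variable "vpc_id" {
  type = string
}

# Hypothetical map of AZ name => private subnet ID in the VPC.
variable "subnets_by_az" {
  type = map(string)
}

# Look up the interface endpoint service and the AZs that offer it.
data "aws_vpc_endpoint_service" "sts" {
  service      = "sts"
  service_type = "Interface"
}

locals {
  # Keep only subnets in AZs where the endpoint service is available.
  endpoint_subnet_ids = [
    for az, subnet_id in var.subnets_by_az : subnet_id
    if contains(data.aws_vpc_endpoint_service.sts.availability_zones, az)
  ]
}

# Create the endpoint only if at least one of our AZs supports it.
resource "aws_vpc_endpoint" "sts" {
  count = length(local.endpoint_subnet_ids) > 0 ? 1 : 0

  vpc_id              = var.vpc_id
  service_name        = data.aws_vpc_endpoint_service.sts.service_name
  vpc_endpoint_type   = "Interface"
  subnet_ids          = local.endpoint_subnet_ids
  private_dns_enabled = true
}
```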

AWS-SSO

While we were building out the Gov environment, we started by using IAM users to bootstrap it, but this was only ever going to be a short-term solution. AWS had recently released AWS-SSO in its commercial environment, and even more recently in its Gov environment. Because this was a complete greenfield buildout, it was a good opportunity to experiment with new technologies and improve our existing implementation.

Unlike standard IAM roles, which exist in a single account, AWS-SSO permission sets are global resources that span the entire organization, and this changes how we build and deploy them.
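To illustrate the difference, here is a minimal Terraform sketch using the AWS provider’s ssoadmin resources. The permission set is defined once for the whole organization and then assigned to individual accounts. The sketch assumes credentials for the account that administers AWS-SSO. The group ID, account IDs, permission set name, and session duration are hypothetical.

```hcl
# Assumes credentials for the account that administers AWS-SSO.
provider "aws" {
  region = "us-gov-west-1"
}

# Hypothetical identity store group that should get read-only access.
variable "group_id" {
  type = string
}

# Hypothetical set of GovCloud account IDs to grant access to.
variable "account_ids" {
  type = set(string)
}

# The organization's AWS-SSO instance.
data "aws_ssoadmin_instances" "this" {}

locals {
  instance_arn = tolist(data.aws_ssoadmin_instances.this.arns)[0]
}

# Defined once for the whole organization...
resource "aws_ssoadmin_permission_set" "read_only" {
  name             = "ReadOnly"
  instance_arn     = local.instance_arn
  session_duration = "PT4H"
}

# GovCloud managed policy ARNs use the aws-us-gov partition.
resource "aws_ssoadmin_managed_policy_attachment" "read_only" {
  instance_arn       = local.instance_arn
  permission_set_arn = aws_ssoadmin_permission_set.read_only.arn
  managed_policy_arn = "arn:aws-us-gov:iam::aws:policy/ReadOnlyAccess"
}

# ...and then assigned to each account that needs it.
resource "aws_ssoadmin_account_assignment" "read_only" {
  for_each = var.account_ids

  instance_arn       = local.instance_arn
  permission_set_arn = aws_ssoadmin_permission_set.read_only.arn
  principal_type     = "GROUP"
  principal_id       = var.group_id
  target_type        = "AWS_ACCOUNT"
  target_id          = each.value
}
```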

Since deploying AWS-SSO in the GovCloud environment, we have back-ported what we learned into our commercial environment. We already had an SSO system in place for access to our entire commercial AWS environment, but AWS-SSO has made this process much smoother and easier.

So what have we learned?

Rebuilding our entire network infrastructure let us test our tooling, processes, and Terraform modules, and improve them along the way. We cleaned up a multitude of hardcoded values and made things more reusable. Because the whole process amounted to rebuilding Slack from scratch, we were also able to take a step back, dive deep into our processes, tools, and AWS footprint, and gain a greater understanding of our platform.